Build With Us

Our

Usa

Help Build/

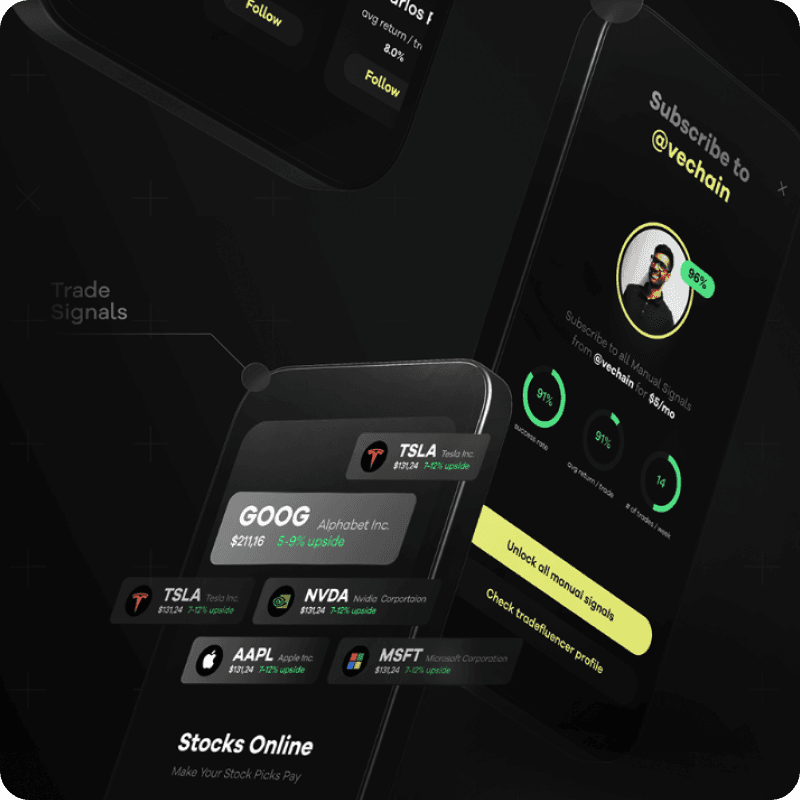

Case Studies/

A Global Network Of Engineering Talent/

Our Developers Have Worked At Companies Like:

We've shipped production software for startups racing to launch and enterprises that can't afford downtime. Our developers architect solutions that scale, ship features users actually want, and deliver applications that define what's possible.

Every engineer brings 5 to 10+ years of experience shipping real products. Every project gets the execution quality that separates market leaders from everyone else. We're building your unfair advantage.

5+ Years Professional Experience

Senior engineers who've shipped at scale and know what works in production.

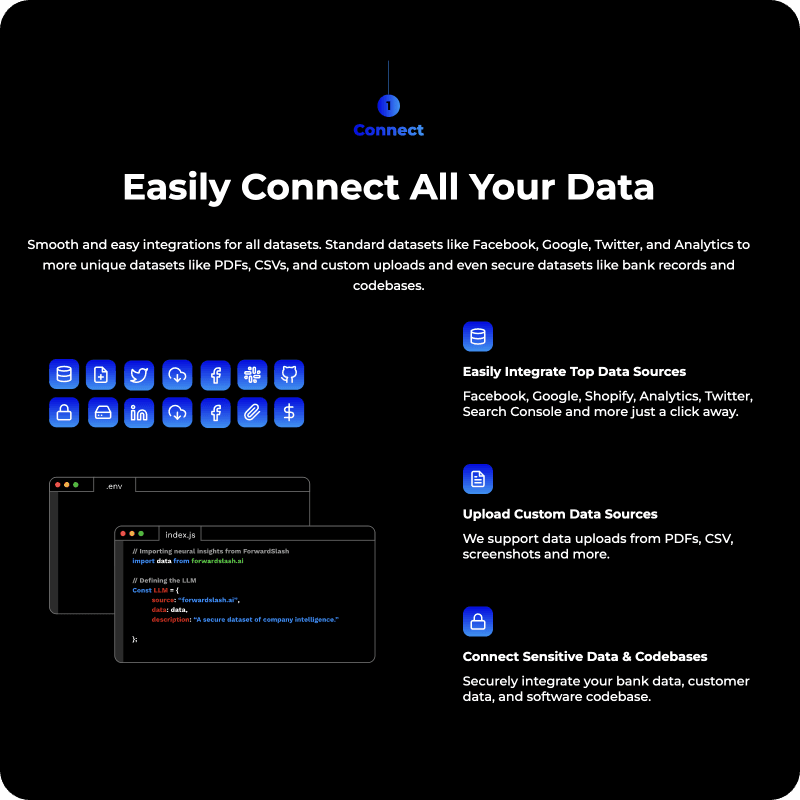

200+ Skills Across Every Stack

CRMs, e-commerce, mobile apps, AI workflows: we've built it all in production.